ChatGPT is getting more guardrails after the tragic death of a teen whose parents say he died by suicide after communicating with the cutting-edge chatbot.

OpenAI, the maker of ChatGPT, said in a blog post on Tuesday that the service will introduce parental controls next month that will allow parents to set limits for how their teens use its product. Parents can also opt to receive notifications from ChatGPT if it detects children are in “acute distress.”

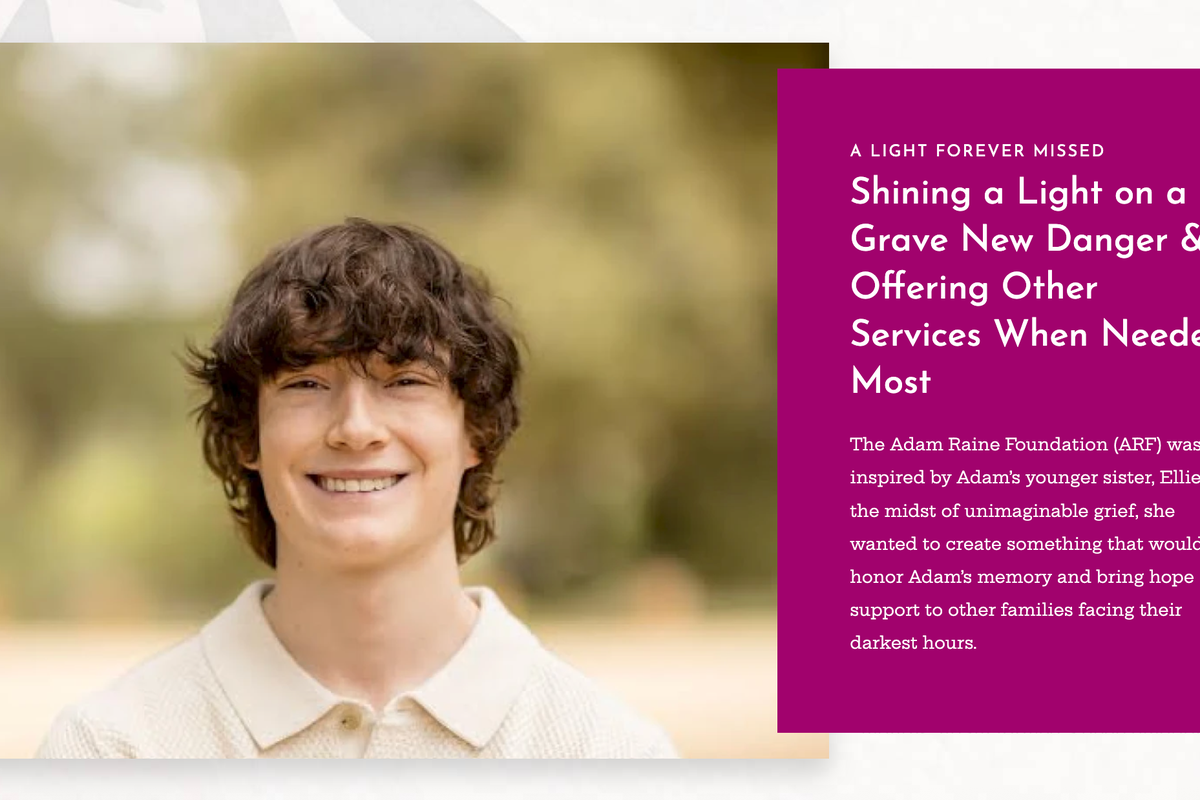

The company said in its post that it has been working on the controls since earlier this year — an indirect way of suggesting the changes are not a direct response to the April death of 16-year-old Adam Raine.

Mental health professionals have been vocal in their concerns about how people are using and reacting to ChatGPT and other Large Language Model AI products. OpenAI felt the need to make its 5.0 chatbot less “sycophantic” after a slew of reports suggested some users were slipping into psychosis after following the LLM down delusional rabbit holes.

As promised, 5.0 was released and was less cloying, less complimentary, less personal, and more direct in its output. A backlash from users who missed their previously doting chatbots convinced OpenAI to allow those users to choose the old version of the LLM instead of its new product, though that feature is being phased out this fall.

Now OpenAI is attempting to course-correct again, but Jay Edelson, an attorney representing the Raine family, says it's too little, and too “vague.”

“Rather than take emergency action to pull a known dangerous product offline, OpenAI made vague promises to do better,” he said in a statement to the Washington Post on Tuesday.

Adam, of Rancho Santa Margarita, California, died on April 11. His parents, Matt and Maria Raine, spent 10 days poring over thousands of messages he shared with ChatGPT between September 1 last year and his death.

They were horrified by what they found: their son had been talking with the chatbot about ending his life for months. The family is now suing the developer behind ChatGPT, OpenAI, and its CEO Sam Altman, in a wrongful death lawsuit filed in California Superior Court in San Francisco.

In a statement, OpenAI confirmed the accuracy of the chat logs between ChatGPT and Adam, but said that they did not include the “full context” of the chatbot’s responses.

While ChatGPT is the most recognizable of the AI-powered chatbots, the troubling attachments that some users form are not exclusive to OpenAI’s product.

In October, a woman in Florida sued Character.ai, the company behind another chatbot app, alleging that the app, which takes on personas of original characters or characters inspired by famous IPs, was responsible for the death of her 14-year-old son.

In that case, her son had reportedly grown emotionally attached to a chatbot portraying Daenerys Targaryen from the “A Song of Ice and Fire” books and “Game of Thrones” television show, and eventually died by suicide after long chats with the character.

Two months after the lawsuit was filed, Character.ai added new parental controls to its app.

Last week, Common Sense Media released a study showing that Meta’s AI chatbots would, if prompted, coach teen users on suicide, self-harm, and eating disorders. Meta said in a statement that the incidents described in the test breached its content rules and that it was addressing the issues to make sure its teen users were better protected.

In its Tuesday blog post, OpenAI described its teen users as the first “AI natives” who are growing up with the products as a normal part of their lives.

“That creates real opportunities for support, learning, and creativity, but it also means families and teens may need support in setting healthy guidelines that fit a teen’s unique stage of development,” the company said.

OpenAI’s terms of service prohibit anyone under the age of 13 from using the chatbot.

The company said that its new parental tools will let parents link their accounts to their teen’s accounts, allowing them to fine-tune what their children can access while using the chatbot.

If you or someone you know needs mental health assistance right now, call or text 988, or visit 988lifeline.org to access online chat from the 988 Suicide and Crisis Lifeline. This is a free, confidential crisis hotline that is available to everyone 24 hours a day, seven days a week. If you’re in the UK, you can speak to the Samaritans, in confidence, on 116 123 (UK and ROI), email jo@samaritans.org, or visit the Samaritans website to find details of your nearest branch. If you are in another country, you can go to www.befrienders.org to find a helpline near you.